By a Legal Tech Observer

The most dangerous thing in Silicon Valley right now isn’t a rogue superintelligence. It isn’t a Chinese chip embargo. It is a judge in Delaware with a gavel.

We have spent the last three years building a multi-trillion-dollar industry on a single, shaky legal assumption: “Training on the internet is Fair Use.”

OpenAI, Google, and Anthropic have operated on the “Ask Forgiveness, Not Permission” model. They scraped the entire web—every book, every news article, every Reddit thread—and fed it into their models, assuming that US Copyright Law would protect them under the doctrine of transformation.

But in late 2025, the ice started to crack.

Following the shock ruling in Thomson Reuters v. ROSS Intelligence last February, in which a federal court rejected the fair use defense for an AI legal research tool trained on Westlaw’s headnotes (short summaries of judicial opinions), the mood in the boardrooms has shifted from “Confidence” to “Panic.”

We are now staring down the barrel of the New York Times v. OpenAI trial, expected to reach a verdict this summer. If the courts rule that AI training is not fair use, the consequences will be nuclear.

Here is the legal autopsy of the AI industry, and why 2026 might be the year the free lunch ends.

1. The “Fair Use” Fantasy is Dead

For years, AI lawyers argued that LLMs were like humans. “If a human reads a library and learns to write,” they said, “that isn’t copyright infringement. Why is it different for a machine?”

The courts are finally answering: Because a machine is a product, not a person.

The ROSS ruling was the turning point. The judge essentially said: You cannot copy a competitor’s database to build a competing commercial product and call it “transformative.”

This destroys the defense for Generative AI.

- The New York Times argues that ChatGPT is a direct market substitute for its journalism. (Why subscribe to the Times when ChatGPT can summarize the paywalled article for you?)

- The Authors Guild argues that Claude is a substitute for fiction writers.

If the models fail the “Market Substitution” test, Fair Use fails. And without Fair Use, every copyrighted work these models were trained on becomes a potential separate count of copyright infringement.

2. The Nuclear Option: Algorithmic Disgorgement

Most people assume that if OpenAI loses, they will just pay a fine. “It’s the cost of doing business,” the VCs say. “They have Microsoft money.”

They are wrong.

The plaintiffs aren’t just asking for money. They are asking for Algorithmic Disgorgement.

This is a legal remedy used by the FTC (in cases like Cambridge Analytica and Weight Watchers) where the court orders a company to delete the model and any algorithms derived from the fruit of the poisonous tree.

If the court rules that GPT-5 was trained on stolen data, OpenAI cannot just pay a fine and keep using it. They might be forced to delete GPT-5.

Imagine the chaos. Every startup building on the API, every enterprise contract, every workflow—poof. Gone overnight. The company would have to retrain the model from scratch using only public domain data (which would make it about as smart as a 1990s chatbot).

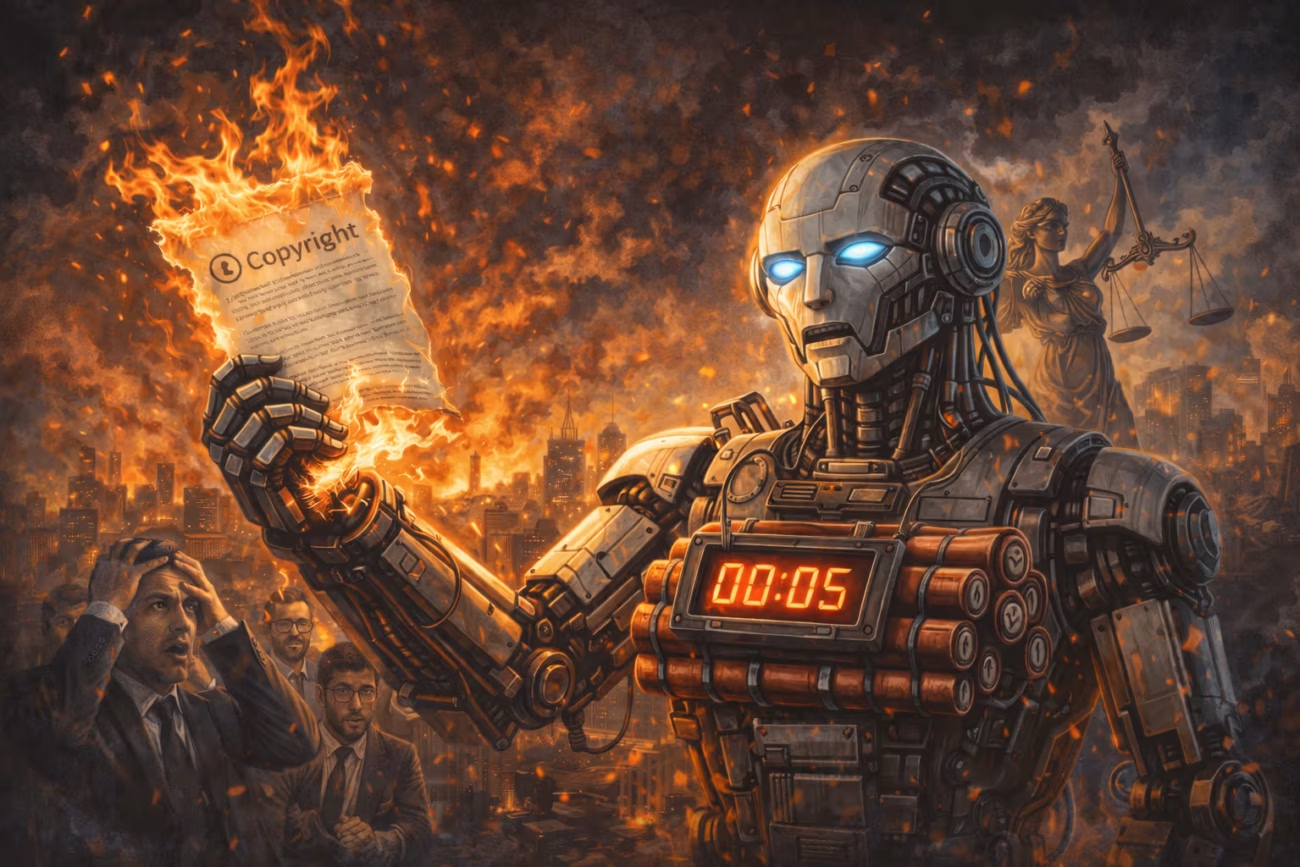

This is the “Legal Time Bomb.” It is the risk that the core asset of the world’s most valuable startup is actually illegal contraband.

3. The “Settlement Moat”: Buying the Law

OpenAI and Google know this. That is why they aren’t waiting for the verdict. They are furiously building a “Settlement Moat.”

Over the last 12 months, we have seen a frenzy of licensing deals:

- News Corp (Wall Street Journal)

- Axel Springer (Business Insider)

- Dotdash Meredith (People, Better Homes)

- Stack Overflow

OpenAI is paying hundreds of millions of dollars to “license” this data retroactively.

Why this creates a monopoly:

If the courts rule that you need a license to train AI, OpenAI wins. Why? Because they are the only ones rich enough to afford the licenses.

A startup in a garage cannot pay News Corp $250 million. They cannot pay Reddit $60 million a year.

If “Fair Use” dies, Open Source AI dies with it. Llama 4 (Meta) becomes illegal because Mark Zuckerberg didn’t pay for the books. Mistral becomes illegal.

We are heading toward a future where only the “Big Three” (Microsoft/OpenAI, Google, Amazon) are legally allowed to be smart, because they are the only ones who paid the “Data Rent.”

4. The “Clean Data” Myth

You might ask: “Why don’t they just train on public domain data? Or create ‘Clean’ models?”

Because “Clean Data” is a myth.

The internet is a soup. You cannot separate the “Copyrighted” water from the “Public Domain” water.

- Is a Reddit comment copyrighted?

- Is a blog post from 2004 copyrighted?

- Is code on GitHub with no license file copyrighted?

To build a “Clean Model,” you would need to filter a petabyte of text, line by line, checking the provenance of every sentence. It is physically impossible.
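To make the problem concrete, here is a minimal sketch of what a provenance filter would look like. Everything in it is a hypothetical illustration: the `Document` record, the `license` field, and the `ALLOWED_LICENSES` allowlist are assumptions, not any lab's actual pipeline. The point is the default branch: anything without a known license has to be thrown out.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical allowlist: only licenses we are sure permit training.
ALLOWED_LICENSES = {"public-domain", "cc0"}


@dataclass
class Document:
    url: str
    text: str
    license: Optional[str]  # None means provenance is unknown


def split_by_provenance(docs: List[Document]) -> Tuple[List[Document], List[Document]]:
    """Separate documents with a known, permitted license from everything else."""
    clean, rejected = [], []
    for doc in docs:
        if doc.license is not None and doc.license.lower() in ALLOWED_LICENSES:
            clean.append(doc)
        else:
            # Unknown or restrictive license: a "clean" model cannot use it.
            rejected.append(doc)
    return clean, rejected


# Toy corpus (made-up entries, for illustration only).
corpus = [
    Document("https://example.com/old-novel", "Call me Ishmael.", "public-domain"),
    Document("https://example.com/forum-comment", "lol same", None),
    Document("https://example.com/blog-2004", "My first post!", None),
    Document("https://example.com/news", "Breaking story...", "all-rights-reserved"),
]

clean, rejected = split_by_provenance(corpus)
print(f"kept {len(clean)} of {len(corpus)} documents")
```

In this toy corpus only one of the four documents survives. On real web data the "unknown" bucket is the overwhelming majority, which is exactly the problem.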

Adobe tried this with Firefly, training it only on licensed Adobe Stock images and public-domain content. It worked for images. But for reasoning, which requires reading the entire messy internet, it is impossible.

If the courts demand “Clean Data,” they are demanding a model that is lobotomized.

5. The Rise of “Data Havens”

So, what happens if the US Supreme Court kills Fair Use in 2026?

The industry won’t stop. It will move.

We will see the rise of “Data Havens.”

Just as companies move money to the Cayman Islands to avoid taxes, AI companies will move their training clusters to jurisdictions with loose copyright laws.

- Japan has already passed laws stating that training AI is not copyright infringement, regardless of profit.

- Israel and Singapore are eyeing similar “AI Safe Harbor” laws to attract talent.

We will see a bifurcated internet:

- US/EU Models: Expensive, dumber, licensed, compliant (“Claude Enterprise”).

- Offshore Models: Smart, cheap, trained on everything, hosted in Tokyo or Tel Aviv (“DarkGPT”).

American companies will be stuck using the “Dumb but Legal” models, while the rest of the world uses the “Smart but Illegal” ones. It will be a reverse technology embargo.

6. The Verdict: The Free Lunch is Over

Whether it happens via a court ruling or a settlement, one thing is clear: Data is no longer free.

The era of scraping the web without consequence is over.

- Creators are installing “poison pills” (like Nightshade) to break models that scrape their art.

- Publishers are blocking crawlers via robots.txt (and suing those who ignore it).

- Platforms like Reddit and X are closing their APIs.
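For the robots.txt item above, here is a rough sketch of how a well-behaved crawler would check whether it is allowed in, using Python's standard `urllib.robotparser`. The robots.txt contents, the `can_crawl` helper, and the example.com URL are illustrative assumptions; `GPTBot` is used as the example user agent a publisher might block.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: block one AI crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


article = "https://example.com/2026/some-article"
print("GPTBot allowed:", can_crawl(ROBOTS_TXT, "GPTBot", article))
print("Other bot allowed:", can_crawl(ROBOTS_TXT, "SomeOtherBot", article))
```

Note that robots.txt is only a request. Nothing in the protocol stops a crawler from skipping this check, which is why the lawsuits matter.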

The cost of training a frontier model is going to skyrocket, not just because of chips, but because of Royalties.

If you are an AI founder, you need to ask yourself: “Is my model legal?”

If your answer is “I hope so,” you are sitting on a time bomb.

The smartest move in 2026?

Don’t build your own model. Use the API of the giant who can afford the lawsuit.

Let Sam Altman go to court. You just build the app.