By a Lead AI Evaluator

The era of “Vibes” is officially over.

For the last three years, we judged AI models based on how they felt.

We asked: “Is it funny?” “Does it sound human?” “Can it write a poem about a pirate fighting a ninja?”

By those metrics, xAI’s Grok-3 is a masterpiece. It is witty, it is rebellious, and it has absolutely zero filter. It is the most entertaining model on the market.

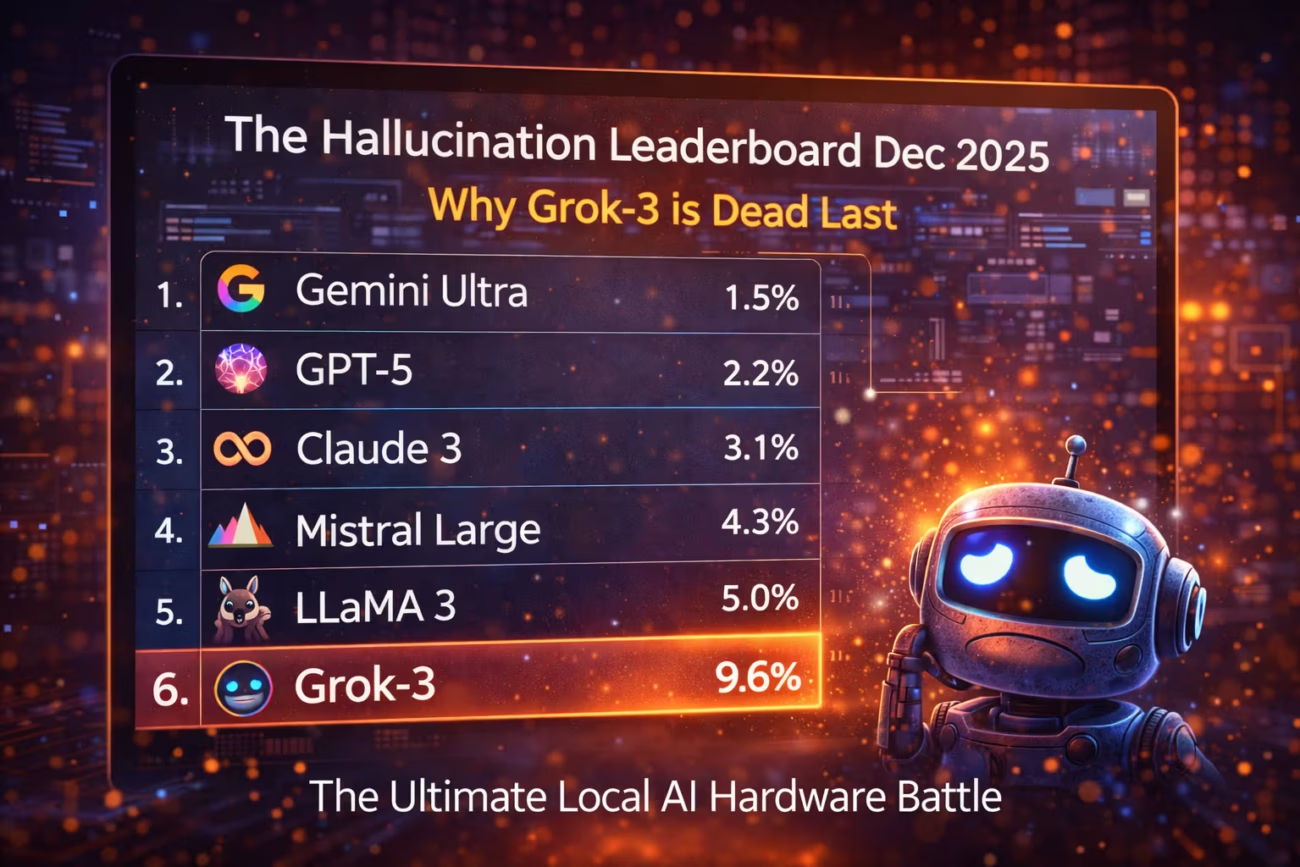

But in December 2025, enterprise AI isn’t about entertainment. It’s about liability. And this morning, Vectara released their Q4 2025 Hallucination Leaderboard—the industry standard for factual consistency—and the results are a bloodbath.

Grok-3 came in dead last.

Not just last, but last by a margin so wide it breaks the chart. While GPT-5 and Gemini 3.0 are fighting over fractions of a percent, Grok-3 is hallucinating at a rate we haven’t seen since 2023.

Here is the deep dive into the numbers, why “Fun” is the enemy of “Fact,” and why the most boring models are now the most valuable assets on earth.

1. The Numbers: The “Reliability Gap” Explodes

The Vectara HHEM-2.5 Benchmark (Hughes Hallucination Evaluation Model) measures one specific thing:

“If I give the model a document, and ask it to summarize it, how often does it invent facts that aren’t in the text?”

It is a test of loyalty to the source material. Here are the December 2025 standings:

| Rank | Model Family | Hallucination Rate | The Vibe |
| --- | --- | --- | --- |
| 1 | Command R++ (Specialized) | 0.4% | The Boring Librarian. Refuses to guess. |
| 2 | Gemini 3.0 Pro | 1.2% | The Honor Student. Very safe, slightly sterile. |
| 3 | GPT-5 (OpenAI) | 1.9% | The Smooth Talker. Lies rarely, but convincingly. |
| 4 | Claude 3.5 Opus | 2.1% | The Poet. Occasionally embellishes for flow. |
| … | … | … | … |
| 18 | Grok-3 (xAI) | 14.2% | The Drunk Uncle. Entertaining, loud, and wrong. |

Look at that gap.

If you use Command R++ to summarize 1,000 legal contracts, you should expect it to lie in about 4 of them.

If you use Grok-3, you should expect it to lie in about 142 of them.

In a corporate environment, a 14% error rate isn’t a “glitch.” It’s a lawsuit.
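To make that arithmetic explicit, here is a minimal sketch. It assumes the leaderboard rate can be read as "share of summaries containing an invented fact," and the function name `expected_hallucinated` is mine, not Vectara's.

```python
def expected_hallucinated(num_docs: int, rate_percent: float) -> float:
    """Expected number of summaries that contain an invented fact.

    Assumes the published rate applies uniformly to every document,
    which is a simplification: real workloads will differ from the benchmark.
    """
    return num_docs * rate_percent / 100


# Rates taken from the table above.
rates = {"Command R++": 0.4, "Grok-3": 14.2}

for model, rate in rates.items():
    affected = expected_hallucinated(1000, rate)
    print(f"{model}: about {affected:.0f} of 1,000 summaries")
```

These are averages, not guarantees. Any single batch of 1,000 contracts could land above or below them.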

2. The Grok Problem: Optimized for Engagement, Not Truth

Why is Grok-3 so bad at this? It has access to the entire X (formerly Twitter) firehose. It has massive compute. It uses the same transformer architecture as everyone else.

The failure isn’t technical; it’s Philosophical.

Every AI model is trained with RLHF (Reinforcement Learning from Human Feedback). Humans rate the AI’s answers, and the AI learns what humans like.

OpenAI optimizes for: “Safe, Helpful, Harmless.”

Cohere optimizes for: “Grounded, Cited, Accurate.”

xAI optimizes for: “Funny, Edgy, Based.”

The problem is that Truth is often boring.

If you ask, “What is the capital of Australia?”, the boring answer is “Canberra.”

But Grok-3’s training data rewards “wit.” So, in its quest to be engaging, it might say:

“It’s Canberra, but let’s be real, everyone wishes it was Sydney because nobody actually lives in Canberra.”

That’s funny. But it opens the door to Editorializing.

And once a model starts editorializing, it stops distinguishing between “Fact” and “Opinion.”

In the benchmark tests, when Grok-3 didn’t know an answer, it didn’t say “I don’t know” (which scores 0% hallucination). It guessed. It made up a funny, plausible-sounding lie because its reward function tells it that Silence is worse than Error.
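Here is a toy version of that incentive, as a hedged sketch. The reward numbers are invented for illustration and are not xAI's (or anyone's) actual reward function. The point is only this: if a wrong-but-entertaining answer still earns more than silence, guessing wins no matter how unsure the model is.

```python
def best_action(p_correct: float, r_correct: float,
                r_wrong: float, r_abstain: float) -> str:
    """Pick the action with the higher expected reward.

    p_correct: the model's chance of being right if it answers.
    r_*:       hypothetical rewards for a correct answer, a wrong
               answer, and saying "I don't know".
    """
    expected_guess = p_correct * r_correct + (1 - p_correct) * r_wrong
    return "guess" if expected_guess > r_abstain else "abstain"


# Engagement-style rewards (made up): a wrong answer still beats silence.
print(best_action(p_correct=0.05, r_correct=1.0, r_wrong=0.2, r_abstain=0.0))

# Grounded-style rewards (made up): a wrong answer is heavily penalized.
print(best_action(p_correct=0.50, r_correct=1.0, r_wrong=-4.0, r_abstain=0.0))
print(best_action(p_correct=0.90, r_correct=1.0, r_wrong=-4.0, r_abstain=0.0))
```

Under the first set of rewards the model guesses even at 5% confidence. Under the second it only answers when it is very likely to be right.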

Grok-3 is the “Twitter Algorithm” personified: It prioritizes engagement over accuracy. That makes it great for shitposting, but terrifying for medical diagnosis.

3. The “Smooth Liar” Paradox (GPT-5)

While Grok is getting roasted, we need to talk about GPT-5.

Ranking #3 with a 1.9% hallucination rate sounds great. But GPT-5 represents a different, more subtle danger.

We call it the “Plausibility Trap.”

When Grok hallucinates, it’s often wild and obvious. It might claim that Napoleon had a laser gun. You spot it instantly.

When GPT-5 hallucinates, it is terrifyingly subtle.

In a test last week, I asked GPT-5 to summarize a Python library’s documentation.

It invented a function called .to_json_schema().

The function name followed the library’s naming convention perfectly.

The syntax was correct.

The logic made sense.

It just didn’t exist.

I spent 30 minutes debugging my code before I realized the AI had gaslit me.
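A cheap guard I could have used is to check that a name actually exists before building on it. The sketch below is one way to do that. The helper `attribute_exists` is hypothetical, and the standard-library `json` module stands in for the library I was working with.

```python
import importlib


def attribute_exists(module_name: str, dotted_attr: str) -> bool:
    """Return True if `dotted_attr` resolves on the imported module."""
    try:
        obj = importlib.import_module(module_name)
    except ImportError:
        # The module itself is missing (or was also invented).
        return False
    # Walk each segment, e.g. "JSONDecoder.decode" -> JSONDecoder, then decode.
    for part in dotted_attr.split("."):
        if not hasattr(obj, part):
            return False
        obj = getattr(obj, part)
    return True


print(attribute_exists("json", "dumps"))           # a real function
print(attribute_exists("json", "to_json_schema"))  # not in the json module
```

This only catches names that do not exist. It will not catch a real function described with the wrong behavior, so it complements reading the docs rather than replacing it.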

GPT-5 has become such a good mimic that its hallucinations are effectively Counterfeit Truths. They pass the “Sniff Test.” This makes the 1.9% error rate arguably more dangerous than Grok’s 14.2%, because you let your guard down. You trust the Smooth Talker. You don’t verify.

4. The Rise of the “Specialist” (Command R++)

The winner of the 2025 Leaderboard isn’t a famous model. It’s Command R++ (by Cohere).

Why did it win? Because it is Boring.

It was trained specifically for RAG (Retrieval Augmented Generation). Its “System Preamble” (its core instruction) is rigorously strict:

“If you cannot find the answer in the provided documents, you must say ‘I don’t know’.”
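You can apply the same rule to any model by putting it in front of the retrieved text. The sketch below only builds the prompt string. The layout and the names `GROUNDING_RULE` and `build_grounded_prompt` are my own assumptions, not Cohere's actual preamble format or API.

```python
# The rule quoted above, reused as a plain instruction string.
GROUNDING_RULE = (
    "If you cannot find the answer in the provided documents, "
    "you must say 'I don't know'."
)


def build_grounded_prompt(question: str, documents: list[str]) -> str:
    """Assemble a prompt that restricts the model to the supplied documents."""
    numbered = "\n\n".join(
        f"[Document {i}]\n{doc}" for i, doc in enumerate(documents, start=1)
    )
    return f"{GROUNDING_RULE}\n\n{numbered}\n\nQuestion: {question}"


prompt = build_grounded_prompt(
    "What is the termination notice period?",
    ["Either party may terminate with 30 days written notice.",
     "Payment is due within 45 days of invoice."],
)
print(prompt)
```

A prompt like this nudges behavior but does not guarantee it. A model trained for grounding will follow the rule far more reliably than one that was not.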

It doesn’t try to be your friend. It doesn’t try to write poems. It treats text processing like accounting.

In the benchmark, Command R++ had the highest “Refusal Rate.”

Grok Refusal Rate: 1% (It answers everything).

Command R++ Refusal Rate: 15% (It admits ignorance).
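If you want to track both numbers on your own data, the bookkeeping is simple. This sketch assumes each summary has already been labeled "faithful", "refused", or "hallucinated" by a reviewer or a judge model, and that a refusal counts as a non-hallucination, as described earlier. The labels and the sample batch are invented for illustration.

```python
from collections import Counter


def score(outcomes: list[str]) -> dict[str, float]:
    """Compute hallucination and refusal rates from per-summary labels."""
    if not outcomes:
        raise ValueError("need at least one labeled outcome")
    counts = Counter(outcomes)
    total = len(outcomes)
    return {
        "hallucination_rate": counts["hallucinated"] / total,
        "refusal_rate": counts["refused"] / total,
    }


# Hypothetical batch of 20 labeled summaries.
batch = ["faithful"] * 16 + ["refused"] * 3 + ["hallucinated"] * 1
print(score(batch))
```

Reporting the two rates side by side matters: a model can push its hallucination rate down simply by refusing more often, and you want to see that trade.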

In 2026, Refusal is a Feature.

I would rather have a model that says “I don’t know” 15 times than a model that lies to me once.

The market is starting to bifurcate:

Use Grok for Creative Writing and Brainstorming.

Use Command R++ for Finance, Law, and Engineering.

5. The “Vectara Score” is the New Credit Score

This leaderboard matters because in 2026, companies are starting to insure their AI workflows.

Insurance companies like AXA and Chubb are now writing policies for “AI Liability.”

If you are a law firm, and you want to use AI to review contracts, the insurer will ask: “Which model are you using?”

If you say “Grok-3,” your premium goes up by 500%.

If you say “Command R++ verified by a Human-in-the-Loop,” your premium stays flat.

We are seeing the “Industrialization of Truth.”

Factual consistency is no longer a “nice to have.” It is a measurable, insurable asset.

Conclusion: Pick Your Poison

The lesson of the December Leaderboard is that General Intelligence is a myth.

You cannot have a model that is both the “Funniest Person in the Room” and the “Most Accurate Accountant.” Those goals pull in opposite directions.

Grok-3 is for the Bar. It is for late-night debates, philosophy, and jokes.

GPT-5 is for the Dinner Party. It is polite, conversational, and mostly right, but you should fact-check its anecdotes.

Command R++ is for the Courtroom. It is dry, robotic, and obsessed with the evidence.

Stop looking for one model to rule them all.

If you are building an app where accuracy matters, look at the bottom of the leaderboard. See who is last (Grok). Run in the opposite direction.

And if you just want to laugh? Well, Grok is still the king of that. Just don’t ask it to do your taxes.