By a Creative Director

I sat in a marketing review yesterday where a junior designer presented a mood board generated entirely by DALL-E 4.

It was technically perfect. The hands had five fingers. The text was legible. The lighting was balanced.

And I hated every single pixel of it.

It had that unmistakable “OpenAI Sheen”—a plastic, safe, hyper-saturated gloss that screams, “I was made by a machine that is terrified of offending anyone.” It looked like a stock photo from a dentist’s waiting room in purgatory.

Then, I opened my laptop, fired up a local install of Flux, and ran the exact same prompt.

The result was gritty. The lighting was harsh. The skin had pores. It looked like a photograph taken by a human being who had experienced sadness.

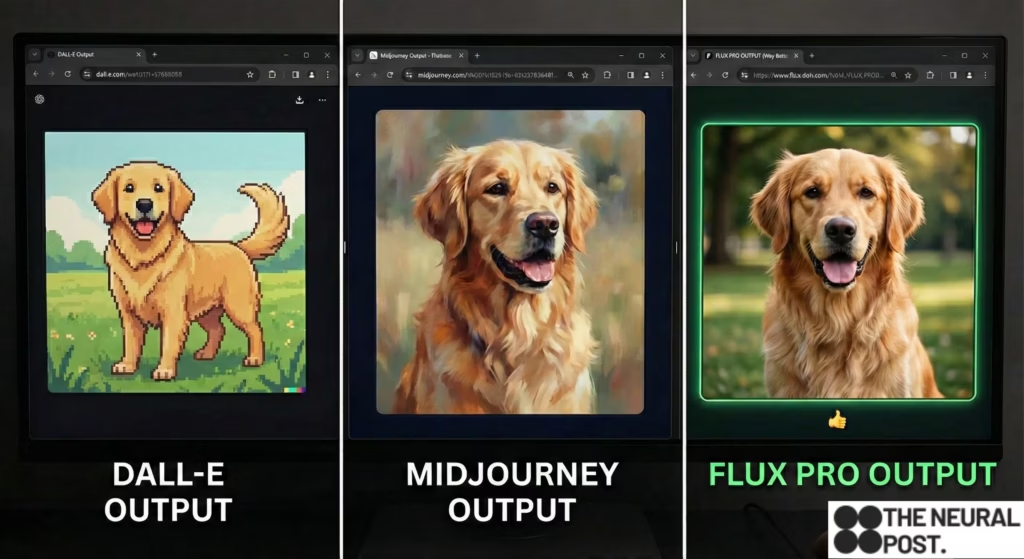

In 2026, the image generation war has split into three distinct camps. We have the Corporate Safe Space (DALL-E), the Aesthetic Walled Garden (Midjourney), and the Open Frontier (Flux).

If you are just making PowerPoint slides, use DALL-E. But if you are an artist? You need to understand why the “raw” model is eating the “polished” ones for lunch.

1. DALL-E 4: The “HR Department” of AI

Using DALL-E 4 feels like trying to paint while a Compliance Officer holds your hand.

The Tech: DALL-E 4 is undeniably smart. It understands complex spatial instructions better than any rival. If you say “A red cube on top of a blue sphere next to a green pyramid,” DALL-E gets it right almost every time.

The Problem: It lies to you.

When you type a prompt into ChatGPT/DALL-E, it doesn’t send your exact words to the model. It sends them to a “Safety Rewriter” first.

- You type: “A photo of a tired doctor.”

- DALL-E Rewrites: “A diverse, uplifting portrait of a medical professional in a well-lit hospital environment, showcasing resilience and hope.”

It sanitizes the emotion. It scrubs the grit. It forces a “Global Corporate Aesthetic” onto everything.

We call this “The Vibe Lobotomy.”

For corporate comms, this is a feature. For storytelling, it is death. You cannot make art if the tool refuses to depict the messy reality of the human condition.
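The rewriting step described in this section can be pictured as a thin layer between your prompt and the model. The sketch below is a toy, not OpenAI’s actual system: `hypothetical_safety_rewriter`, its word swaps, and `HOUSE_STYLE` are invented for illustration. The practical lesson holds either way: log the prompt that was actually sent. (OpenAI’s DALL-E 3 API, for instance, returns the rewritten prompt in a `revised_prompt` field.)

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical house style appended by the toy rewriter (invented for illustration).
HOUSE_STYLE = "diverse, uplifting, well-lit, showcasing resilience and hope"

# Hypothetical word swaps that "soften" the mood of a prompt.
SOFTENERS = {"tired": "dedicated", "gritty": "polished", "sad": "thoughtful"}


@dataclass
class GenerationRequest:
    user_prompt: str  # what you typed
    sent_prompt: str  # what the image model actually receives


def hypothetical_safety_rewriter(prompt: str) -> str:
    """Toy rewriter: swaps 'gritty' words for softer ones, then appends a house style."""
    words = [SOFTENERS.get(word.lower(), word) for word in prompt.rstrip(".").split()]
    return " ".join(words) + ", " + HOUSE_STYLE


def passthrough(prompt: str) -> str:
    """What a typical open-weight pipeline does: send the prompt unchanged."""
    return prompt


def submit(prompt: str,
           rewriter: Callable[[str], str] = hypothetical_safety_rewriter) -> GenerationRequest:
    # Keep both versions so the rewrite is visible instead of silent.
    return GenerationRequest(user_prompt=prompt, sent_prompt=rewriter(prompt))


rewritten = submit("A photo of a tired doctor.")
untouched = submit("A photo of a tired doctor.", rewriter=passthrough)
print(rewritten.sent_prompt)
print(untouched.sent_prompt)
```

The tired doctor becomes a “dedicated” one wrapped in the house style, while the passthrough version reaches the model exactly as typed.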

2. Midjourney v7: The Beautiful Trap

Midjourney v7 is the seductive option.

It is, without a doubt, the most naturally beautiful model. You can type “Potato” and it will give you a potato that looks like it was painted by Rembrandt.

The Aesthetic: Midjourney has an opinion. It loves dramatic lighting. It loves teal and orange. It loves volumetric fog.

It makes everything look epic.

The Problem: You can’t fully turn off the “Midjourney Filter.” Even the --style raw parameter only dials it down.

If I need a raw, amateur-looking photo for a documentary project, Midjourney struggles. It insists on making it look “good.” It insists on perfect composition.

It is a Walled Garden. You cannot run it locally. You cannot fine-tune it on your own style. You are renting Midjourney’s taste, and eventually, all your work starts to look like everyone else’s work.

3. Flux: The “Linux” of Art

Then there is Flux (by Black Forest Labs).1

Flux doesn’t care about your feelings. It doesn’t care about “safety” (mostly). It just renders the physics of light.

Why it Wins:

- Zero “Vibe Injection”: Flux has no personality. If you ask for a “boring photo,” it gives you a boring photo. It doesn’t try to make it “epic.” This neutrality is the ultimate power because it allows you to supply the style.

- LoRAs (The Secret Weapon): Because Flux ships open weights, the community has built thousands of LoRAs (Low-Rank Adaptations).2 These are tiny add-on files that plug into the model to teach it specific concepts.

- Want the style of 1990s Polaroid film? Plug in the Polaroid LoRA.

- Want the specific character design of your brand mascot? Train a LoRA on 10 images, and Flux learns it remarkably well.

You cannot do this with DALL-E. You cannot do this with Midjourney.
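Why are LoRA files so small? Instead of storing a new copy of a weight matrix W (d_out × d_in), a LoRA stores two thin matrices, B (d_out × r) and A (r × d_in), and the adapted layer uses W + (alpha / r) · B·A. The plain-Python sketch below counts the parameters and merges a toy adapter into a toy weight matrix. The 3072-wide layer and rank 16 are illustrative assumptions, not measurements of any published Flux LoRA.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive matrix product of a (n x k) and b (k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_param_count(d_out: int, d_in: int, rank: int) -> Tuple[int, int]:
    """Parameters in a full weight update vs. a rank-`rank` LoRA update (B plus A)."""
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora


def merge_lora(W: Matrix, B: Matrix, A: Matrix, alpha: float, rank: int) -> Matrix:
    """Return W + (alpha / rank) * (B @ A): the layer weights with the adapter baked in."""
    delta = matmul(B, A)
    scale = alpha / rank
    return [[W[i][j] + scale * delta[i][j] for j in range(len(W[0]))] for i in range(len(W))]


# Illustrative layer size and rank (assumptions, not taken from a specific model).
full, lora = lora_param_count(d_out=3072, d_in=3072, rank=16)
print(f"full update: {full:,} params, LoRA update: {lora:,} params ({lora / full:.1%})")

# Toy 2x2 example: a rank-1 adapter nudges one weight of an identity matrix.
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [0.0]]  # d_out x rank
A = [[0.0, 2.0]]    # rank x d_in
print(merge_lora(W, B, A, alpha=1.0, rank=1))
```

At these assumed sizes the adapter holds about 1% of the parameters of the layer it modifies, which is why a LoRA ships as an add-on file rather than a whole new model.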

With Flux, I own the model. I can run it on my studio’s GPU server. No internet connection required. No monthly fee. No “Safety Rewriter” changing my prompt.
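Whether a model fits on a given GPU starts with simple arithmetic: parameter count times bytes per parameter. The back-of-the-envelope sketch below assumes the 12-billion-parameter size Black Forest Labs publishes for the FLUX.1 transformer and deliberately ignores the text encoders, the VAE, and activation memory, so real usage is higher.

```python
def weight_memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights, in GiB (2**30 bytes)."""
    return num_params * bytes_per_param / 2**30


# FLUX.1 transformer size as published; text encoders, VAE, and activations
# are left out of this estimate on purpose.
FLUX_TRANSFORMER_PARAMS = 12e9

# Bytes per parameter at common precisions.
PRECISIONS = {"fp32": 4.0, "bf16": 2.0, "fp8": 1.0, "4-bit": 0.5}

for name, nbytes in PRECISIONS.items():
    print(f"{name:>5}: {weight_memory_gib(FLUX_TRANSFORMER_PARAMS, nbytes):5.1f} GiB")
```

In bf16 the transformer weights alone come to roughly 22 GiB, which is why fp8 and 4-bit builds are popular on single consumer GPUs.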

4. The Professional Workflow (The “Sandwich”)

So, how do professional studios actually work in 2026? We don’t use just one. We use the “Flux Sandwich.”

Step 1: The Bones (Flux)

We use Flux to generate the base composition. We use ControlNet (a conditioning network that locks in a pose, edge map, or depth map) to ensure the layout matches what the client sketched. We get the anatomy and the lighting physics right.
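ControlNet trains a copy of part of the base network on the control image (a pose skeleton, edge map, or depth map) and adds its output back into the frozen base model through “zero convolutions”: layers whose weights start at zero, so an untrained ControlNet changes nothing. The sketch below shrinks that idea to a single spatial position and a 1x1 zero convolution in plain Python. The function names are hypothetical; `conditioning_scale` plays the role of the `controlnet_conditioning_scale` argument in Hugging Face diffusers’ ControlNet pipelines.

```python
from typing import List


def zero_conv_weights(channels: int) -> List[float]:
    """Per-channel weights of a 1x1 'zero convolution', initialized to zero as in ControlNet."""
    return [0.0] * channels


def inject_control(base: List[float], control: List[float],
                   weights: List[float], conditioning_scale: float = 1.0) -> List[float]:
    """Add the control branch's contribution to the frozen base model's features.

    base:    features from the frozen base model (one value per channel)
    control: features the trainable copy computed from the pose/edge/depth map
    weights: the 1x1 zero-convolution weights (learned during ControlNet training)
    """
    return [b + conditioning_scale * w * c for b, w, c in zip(base, weights, control)]


base = [0.5, -1.0, 2.0]
control = [3.0, 3.0, 3.0]

# Before training, the zero convolution passes nothing through: output equals base.
print(inject_control(base, control, zero_conv_weights(3)))

# After training (hypothetical learned weights), the control signal steers the
# features, and conditioning_scale dials how hard it steers.
learned = [0.1, 0.0, -0.2]
print(inject_control(base, control, learned, conditioning_scale=0.5))
```

Starting from zero is the design choice that lets ControlNet bolt onto a pretrained model without damaging it: training can only add guidance, never scramble what the base model already knows.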

Step 2: The Vibe (Midjourney – Image-to-Image)

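We take that raw Flux render and feed it into Midjourney as an image prompt with a high image weight (--iw). Midjourney has no true “denoising strength” slider, but a heavy image weight plays a similar role. We let Midjourney apply its “beauty filter” over the top, adding texture and color grading, while keeping the composition largely intact.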
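In open-weight image-to-image pipelines (Flux, Stable Diffusion), “denoising strength” decides how much of the starting image survives: the image is pushed part of the way back toward noise, and only the remaining fraction of the sampling steps is run. The sketch assumes a linear noise schedule from sigma = 1 (pure noise) to 0 (clean), the rectified-flow mixing rule x = (1 - sigma) * image + sigma * noise used by Flux-style models, and a diffusers-style rule that runs the last int(steps * strength) steps. The helper names are hypothetical.

```python
import random
from typing import List


def img2img_sigmas(num_steps: int, strength: float) -> List[float]:
    """Noise levels that actually get denoised for a given strength.

    Assumes a linear schedule 1.0 -> 1/num_steps and that only the last
    int(num_steps * strength) steps run (diffusers-style step selection).
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    schedule = [1.0 - i / num_steps for i in range(num_steps)]
    steps_to_run = min(int(num_steps * strength), num_steps)
    return schedule[num_steps - steps_to_run:]


def noise_start_image(pixels: List[float], sigma: float, rng: random.Random) -> List[float]:
    """Rectified-flow noising: x = (1 - sigma) * image + sigma * gaussian_noise."""
    return [(1.0 - sigma) * x + sigma * rng.gauss(0.0, 1.0) for x in pixels]


rng = random.Random(0)
low = img2img_sigmas(num_steps=28, strength=0.3)   # keeps most of the composition
high = img2img_sigmas(num_steps=28, strength=0.9)  # mostly regenerates the image
print(len(low), round(low[0], 3))
print(len(high), round(high[0], 3))

start = noise_start_image([0.2, 0.5, 0.9], low[0], rng)
```

Low strength means few steps starting from a lightly noised image, so the layout survives; at strength 1.0 the starting image is fully replaced by noise and nothing of the composition is guaranteed to remain.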

Step 3: The Fix (Photoshop AI)

We use Adobe Firefly (trained on licensed content and marketed as commercially safe) to fix the small details: branding logos, hands, text.

Conclusion: Control is the Only Metric

The debate between DALL-E and Midjourney is a distraction. They are consumer toys. They are “Text-to-Image” slot machines.

Flux is a professional tool.3 It is a Rendering Engine.

It requires more work. You have to install Python libraries. You have to manage VRAM. You have to understand what a “scheduler” is.
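A “scheduler” decides which noise levels the sampler visits, and a sampler such as Euler walks the image from noise to clean along those levels. The toy below assumes a rectified-flow model (the family Flux belongs to) and replaces the neural network with an “oracle” that already knows the target, so only the scheduling logic is on display. `shift_sigmas` uses the SD3-style timestep shift, standing in (as an assumption) for the shifting that Flux pipelines apply.

```python
from typing import List


def linear_sigmas(num_steps: int) -> List[float]:
    """Evenly spaced noise levels from 1.0 (pure noise) down to 0.0 (clean)."""
    return [1.0 - i / num_steps for i in range(num_steps + 1)]


def shift_sigmas(sigmas: List[float], shift: float) -> List[float]:
    """SD3-style timestep shift: shift > 1 spends more steps at high noise levels."""
    return [shift * s / (1.0 + (shift - 1.0) * s) for s in sigmas]


def euler_sample(noise: List[float], target: List[float], sigmas: List[float]) -> List[float]:
    """Euler integration of a rectified-flow ODE from sigma=1 to sigma=0.

    The 'model' is a toy oracle that knows the target image, so its velocity
    prediction v = (x - target) / sigma is exact; a real model only estimates it.
    """
    x = list(noise)
    for s, s_next in zip(sigmas[:-1], sigmas[1:]):
        velocity = [(xi - ti) / s for xi, ti in zip(x, target)]
        x = [xi + (s_next - s) * vi for xi, vi in zip(x, velocity)]
    return x


plain = linear_sigmas(8)
shifted = shift_sigmas(plain, shift=3.0)
print([round(s, 2) for s in plain])
print([round(s, 2) for s in shifted])
print(euler_sample(noise=[0.7, -1.2], target=[0.1, 0.4], sigmas=shifted))
```

With a perfect oracle every schedule lands on the target. With a real, imperfect model, where the steps are placed changes the result, which is why scheduler choice and shift values show up as knobs in local Flux setups.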

But the reward is Control.

When you are building a brand, you don’t want the AI to be “creative.” You want it to be obedient.

DALL-E thinks it’s an artist. Flux knows it’s a brush.

And I will always choose the brush.
