By a Prompt Engineer

I fired OpenAI o1 this morning.

Don’t get me wrong. It’s a brilliant model. It solves riddles that make GPT-4o look like a golden retriever. It can write code that actually compiles on the first try. It is the “Smartest” model in the world.

It is also bankrupting my startup.

At $60 per million tokens, using o1 for everyday tasks is like commuting to work in a helicopter. It’s cool, but the unit economics are insane.

But here is the secret that OpenAI (and Google) doesn’t want you to know: “Reasoning” isn’t magic. It’s just a script.

The “hidden chain of thought” that o1 uses? It’s just the model talking to itself before it talks to you. It is generating intermediate tokens to process the logic, and then hiding them from the final output.

And guess what? You don’t need to pay $60 for that. You can force Claude 3.5 Haiku (which costs pennies) or Llama 3 (which is free locally) to do the exact same thing.

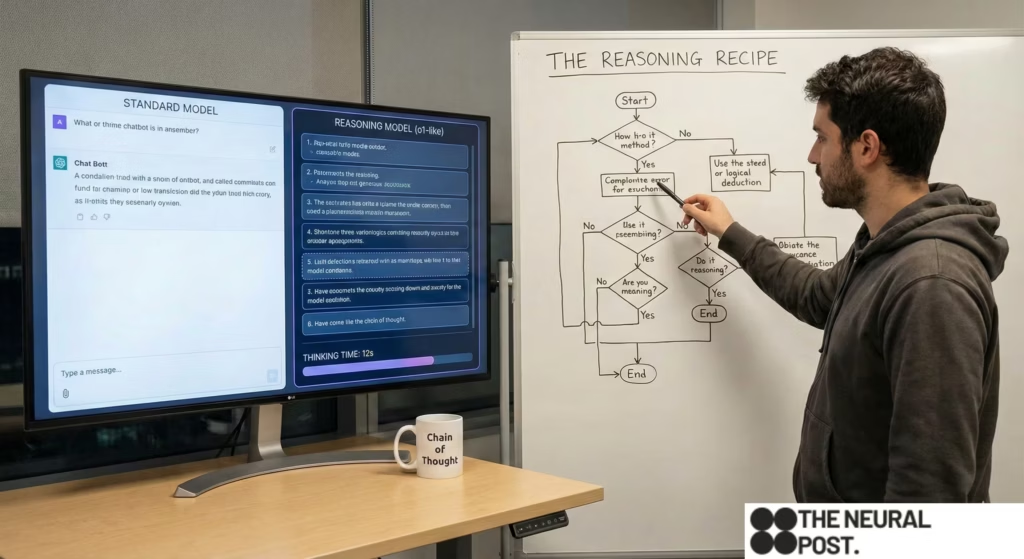

We call it the “Reasoning Recipe.” It is the difference between a model that hallucinates and a model that thinks.

Here is how to turn a dumb, cheap model into a smart, slow one.

The Theory: System 1 vs. System 2

To understand the recipe, you have to understand how LLMs work.

By default, LLMs use System 1 thinking (Fast, Intuitive). They predict the next word immediately.

- User: “What is 123 * 45?”

- LLM: “5535” (It guesses based on pattern matching).

To get the right answer, you need System 2 thinking (Slow, Deliberative).

- System 2: “Okay, 100 * 45 is 4500. 20 * 45 is 900. 3 * 45 is 135. Add them up. 4500 + 900 + 135 = 5535.”

The “Reasoning Models” (o1, Gemini 2.0 Thinking) are just standard models forced into System 2 mode. They are forced to output the “scratchpad” work before the final answer.1

The Recipe: The <thinking> Loop

You can implement this in your system prompt right now. Whether you are using the API or just chatting, this structure forces the model to slow down.

I paste this into the System Prompt of every “dumb” model I use.

The “Inner Monologue” Script

Markdown

You are a deliberative reasoning engine. You do not give answers immediately.

You must “think” before you speak.

Follow these steps for every user query:

1. **Deconstruct:** Break the user’s query down into its core components. What is being asked? What are the constraints?

2. **Draft:** Create a rough plan or a draft solution in your scratchpad.

3. **Critique:** Look at your draft. Is it wrong? Are there edge cases? Does it violate any safety or logic constraints?

4. **Refine:** Fix the errors in the draft.

5. **Finalize:** Output only the final, polished answer.

**FORMATTING RULE:**

You must output your thinking process inside <thinking> tags.

You must output your final answer inside <answer> tags.

Why This Works (The “Token Tax”)

When you run this script on Claude 3.5 Haiku, something magical happens.

User: “Write a Python script to scrape LinkedIn that avoids detection.”

Standard Haiku: “Here is a script using Selenium…” (This is bad. It will get banned instantly. It’s a System 1 guess).

Reasoning Haiku (with the Recipe):

XML

<thinking>

User wants a LinkedIn scraper.

Constraint: Avoid detection.

Critique: Selenium is easily detected by anti-bot measures. Standard headers will fail.

Refinement: I need to use a headless browser with stealth plugins, or an API wrapper.

Better approach: Suggest using the official API to avoid ToS violation, or use ‘playwright-stealth’.

Let’s draft the code using Playwright with stealth modifications…

Wait, LinkedIn has strict legal scraping protections (HiQ vs LinkedIn). I should warn the user.

</thinking>

<answer>

Here is a robust approach using Playwright with stealth plugins, but please note the legal risks… [Code follows]

</answer>

The answer is 10x better. It caught the edge cases. It considered the anti-bot measures.

Why? Because it “bought time.”

Intelligence in LLMs is a function of Token Volume.

The more tokens the model generates about the problem, the smarter the final answer gets.

By forcing it to write 200 words of <thinking>, you are essentially giving it “CPU cycles” to process the logic.

Yes, you pay for those 200 tokens. That is the “Reasoning Tax.”

But paying for 200 tokens of Haiku is still 95% cheaper than paying for 1 token of o1.

The “Haiku-Chain” (Poor Man’s o1)

If you are building an app, you can take this a step further.

You don’t want the user to see the messy <thinking> tags. It looks unprofessional.

So, you use a regex parser in your code.

- Send the prompt to Haiku.

- Haiku generates the <thinking> and the <answer>.

- Your app strips out everything between <thinking> and </thinking>.

- You show the user only the <answer>.

Congratulations. You just built o1.

From the user’s perspective, they asked a hard question, the app paused for 3 seconds (while Haiku generated the thinking tokens), and then it delivered a brilliant answer.

When to Use This (And When Not To)

This recipe isn’t for everything.

DO NOT use it for:

- “Hello.”

- “What is the capital of France?”

- “Turn on the lights.”

These are System 1 tasks. Adding reasoning just adds latency and cost.

DO use it for:

- Coding: “Debug this React component.” (The model needs to trace the state).

- Math: “Calculate the LTV of this customer cohort.”2

- Creative Strategy: “Critique this marketing email.”

The “Negative Constraints” Trick

The most powerful part of the <thinking> block is that it allows the model to self-correct.

In my prompts, I often add a specific instruction to the thinking phase:

“In your <thinking> block, explicitly list 3 reasons why your first idea might be wrong.”

This forces the model to be adversarial against itself.

If you ask standard ChatGPT: “How do I invest $10k?” it gives generic advice.

If you force it to critique itself:

- Thinking: “My first idea was S&P 500. Why might that be wrong? 1. The market is at an all-time high. 2. The user might need the money soon. 3. Inflation is high.”

The final answer becomes nuanced. It becomes “Senior” advice, not “Junior” advice.

Conclusion: You Are the Architect

The “Reasoning Model” hype is mostly marketing.

OpenAI and Google are just wrapping this “Chain of Thought” process into a black box and charging you a premium for it.

Don’t buy the black box. Build your own.

Take the cheapest, fastest model you can find (like Haiku or Gemini Flash), give it a “Reasoning Recipe,” and watch it outperform models that cost 50x as much.

Intelligence isn’t just about the size of the brain (parameters). It’s about how much time you take to think.

Force the model to pause. Force it to sweat.

The results are worth the wait.