By an Open-Source Purist

There is a strange phenomenon happening in the tech world right now. Mark Zuckerberg, the man who helped turn surveillance capitalism into a mass-market business model, the man who built the walled garden of Facebook, is being hailed as the “Robin Hood” of Artificial Intelligence.

Walk into any developer meetup in San Francisco or London, and you will hear the same narrative:

“OpenAI and Google are the bad guys. They are closed. They are hoarding the tech. But Meta? Meta is the good guy. They gave us Llama. They are saving Open Source.”

I have been a Linux kernel contributor for fifteen years. I have the “Open Source” definition tattooed on my brain (and figuratively on my heart). And I am here to tell you that this narrative is the greatest magic trick in the history of Silicon Valley marketing.

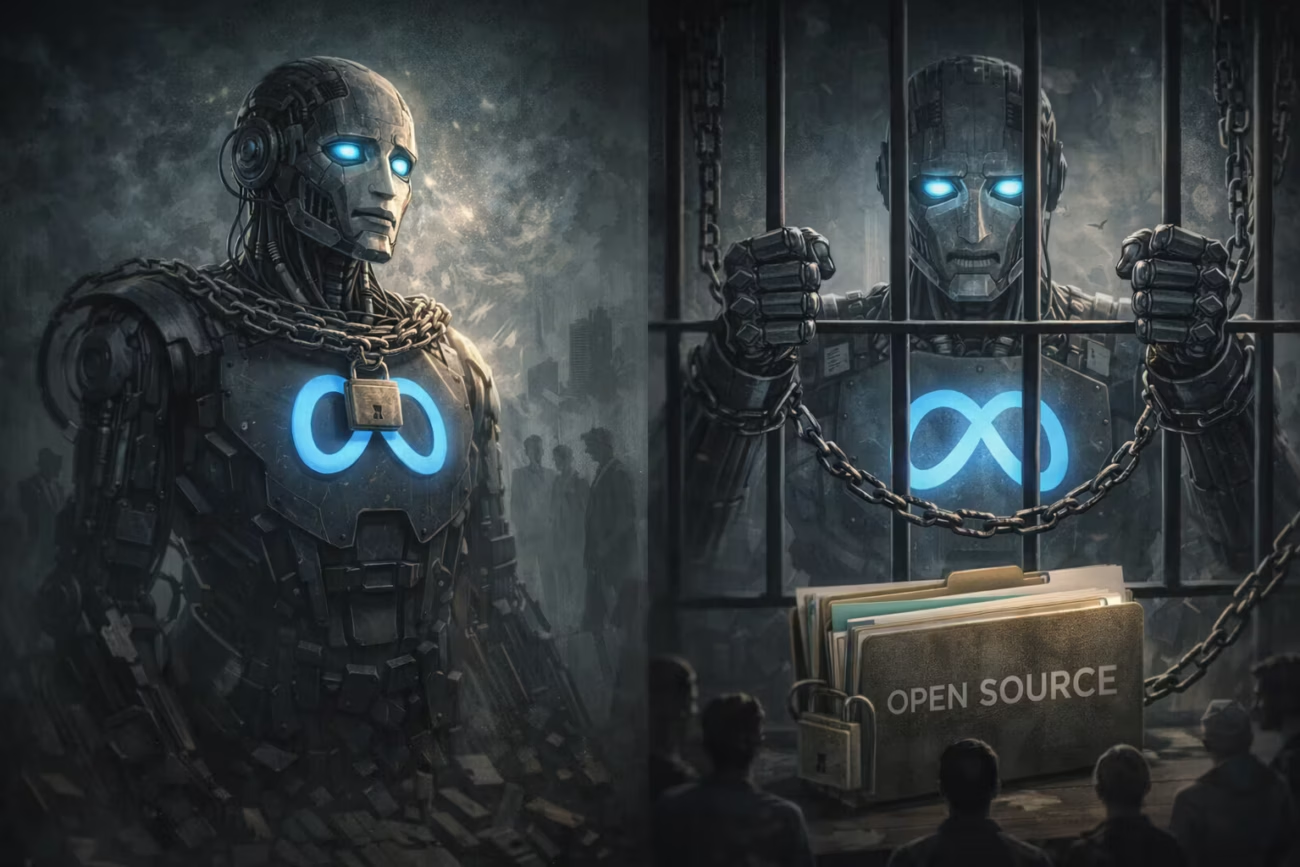

Meta is not “saving” Open Source. They are redefining it to suit their corporate interests, and we are all swallowing the bait.

Llama 3, Llama 4, and whatever comes next are not Open Source. They are Open Weights. And the difference between those two terms is not just semantics; it is the difference between owning your house and renting it from a landlord who hasn’t decided to evict you yet.

Here is why the “Open AI” revolution is a lie, and why building your startup on top of the Llama stack might be a fatal mistake.

1. The Coca-Cola Recipe Problem

To understand why Llama isn’t Open Source, you have to understand how modern AI is made.

Creating an LLM involves three ingredients:

- The Code: The Python scripts that define the neural network architecture.

- The Data: The trillions of tokens of text scraped from the internet.

- The Weights: The final file of learned parameters, billions of numbers, that results from months of training.

Meta gives us The Code (which is trivial; anyone can write a Transformer architecture) and The Weights (the final product).

They do not give us The Data.

In the software world, this is the equivalent of Coca-Cola selling you a bottle of soda and saying, “This is Open Source because you can drink it, and you can even analyze the liquid in a lab.”

But you can’t make Coca-Cola. You don’t have the recipe. You don’t know the ratio of sugar to secret ingredients. You are entirely dependent on the factory to keep producing it.

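True Open Source is about being able to study, modify, and rebuild what you are given. The Open Source Initiative (OSI) applies that idea to AI in its Open Source AI Definition, which asks for enough detail about the training data that a skilled person could build a substantially equivalent system. Meta does not publish that. If I cannot obtain the data and re-train the model myself to verify the results, it is not Open Source. It is “Freeware.”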
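To make the reproducibility point concrete, here is a toy sketch in Python. Everything in it is made up for illustration: `train` is a two-parameter stand-in for an LLM training run, `private_data` plays the role of the dataset the publisher keeps to itself, and `fingerprint` is just a checksum of the resulting weights. Real training runs are rarely bit-for-bit repeatable, so verification there would mean "substantially equivalent" rather than identical hashes, but the dependency on the data is the same.

```python
import hashlib
import random
import struct


def train(data, seed=0, epochs=200, lr=0.01):
    """Fit y = w*x + b with plain SGD; a toy stand-in for 'months of training'."""
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return w, b


def fingerprint(weights):
    """Hash the exact bytes of the two weights, like a checksum of a released file."""
    return hashlib.sha256(struct.pack("<2d", *weights)).hexdigest()


# The "secret recipe": a dataset only the publisher holds (hypothetical).
private_data = [(x, 3.0 * x + 1.0) for x in range(-5, 6)]
released = fingerprint(train(private_data))

# With the data, anyone can rerun training and check the released weights.
same_data_rerun = fingerprint(train(private_data))

# Without it, an outsider has to guess at the data and ends up somewhere else.
guessed_data = [(x, 3.0 * x + 1.2) for x in range(-5, 6)]
guess_rerun = fingerprint(train(guessed_data))

print(released == same_data_rerun)  # True
print(released == guess_rerun)      # False
```

The code and the released weights are both "open" in this sketch. The only thing that lets a third party confirm where the weights came from is the data.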

Why does this matter?

Because without the data, we don’t know what the model knows. We don’t know if it was trained on copyrighted books (it was). We don’t know if it was trained on biased hate speech (it was). We are treating the model as a “Black Box” that we are allowed to play with, but never fully understand.

If Linux worked this way—if Linus Torvalds gave us the kernel binary but refused to show us the source code—the internet as we know it would not exist. We wouldn’t be able to patch security holes. We wouldn’t be able to fork it. We would be serfs on Linus’s land.

With Llama, we are serfs on Zuckerberg’s land.

2. The “700 Million User” Trap

Let’s look at the license.

Real Open Source licenses (MIT, Apache 2.0, GPL) are famous for one thing: Freedom.

You can use Linux to run a hospital. You can use Linux to run a nuclear missile. You can use Linux to build a competitor to Linux. The author cannot stop you. That is the price of freedom.

Now, look at the Llama Community License. It has a poison pill buried in the fine print.

“If, on the Llama 3 Release Date, the monthly active users of the products or services made available by or for Licensee… is greater than 700 million monthly active users in the preceding calendar month, you must request a license from Meta.”

Translation: “You are free to use this, unless you are already big enough to threaten us.”

If you are a startup, this doesn’t matter today. But if you are Apple? If you are TikTok? You cannot use Llama unless Meta agrees to give you a separate license. Meta has effectively written a “Non-Compete” clause into their “Open” license.
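Read literally, the sentence quoted above boils down to a one-line check. The sketch below is only my reading of that single sentence, not of the full license: the `Licensee` class, its `mau_before_release` field, and both example companies are hypothetical, and the user counts are invented.

```python
from dataclasses import dataclass

# The threshold quoted in the clause above.
MAU_THRESHOLD = 700_000_000


@dataclass
class Licensee:
    name: str
    # Hypothetical field: monthly active users in the calendar month
    # before the model's release date.
    mau_before_release: int


def must_request_license(licensee: Licensee) -> bool:
    """One reading of the clause: strictly more than 700M MAU triggers it."""
    return licensee.mau_before_release > MAU_THRESHOLD


# Made-up companies and made-up numbers, purely for illustration.
garage_startup = Licensee("garage-startup", 12_000)
tech_giant = Licensee("tech-giant", 1_500_000_000)

for company in (garage_startup, tech_giant):
    print(company.name, must_request_license(company))
```

The condition is strictly “greater than,” and the count it looks at is tied to the month before the release date. No MIT, Apache 2.0, or GPL license contains a check like this at all.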

Furthermore, there is an Acceptable Use Policy (AUP). You cannot use Llama for “illegal or harmful” activities.

On the surface, this sounds good. We don’t want AI generating bioweapons.

But who defines “harmful”?

Today, it means “don’t build bombs.”

Tomorrow, could it mean “don’t build a social network that competes with Instagram”?

Could it mean “don’t generate content that criticizes Meta’s political lobbying”?

When the license allows the creator to revoke your rights based on how you use the software, it is not Open Source; the Open Source Definition explicitly forbids discriminating against fields of endeavor. It is a corporate tool on a long leash.

3. Commoditize the Complement (Why Zuck is Doing This)

If Meta isn’t doing this out of the goodness of their hearts, why are they spending billions of dollars to train these models and give them away for free?

It’s a classic strategy called “Commoditizing the Complement.”

Described by Joel Spolsky back in 2002, the strategy is simple: drive the price of whatever complements your product toward zero, so that demand for your own product goes up. If that complement happens to be your competitors’ core product, even better.

- Google made Android open source to destroy Windows Mobile and ensure Search stayed relevant on phones.

- Meta is making Llama “open” to destroy OpenAI’s and Google’s margins.

OpenAI’s business model depends on selling intelligence. They need GPT-5 to be expensive so they can pay for their servers.

Meta’s business model is Attention. They make money when you scroll Instagram. They don’t need to make money on the AI model itself; they just need the AI model to make their ads better and their feeds more addictive.

By releasing Llama for free, Meta is scorching the earth. They are telling the world: “Intelligence is not a product. It is a utility. It should be free.”

This hurts OpenAI. It hurts Anthropic. It hurts Google.

But it helps Meta. It ensures that the global standard for AI development happens on the PyTorch/Llama stack, a stack that Meta built and still steers. They are building the roads so that they can decide where the traffic flows.

We are not being “liberated” by Llama. We are being recruited as unpaid R&D interns for the Meta AI research division.

4. The “Open-Washing” of Safety

There is another, darker reason for the “Open Weights” but “Closed Data” approach: Liability.

If Meta released the dataset—the millions of pirated books, the scraped New York Times articles, the YouTube transcripts—they would be sued into oblivion. The copyright lawsuits would be endless.

By keeping the data closed but releasing the weights, they are playing a legal shell game. They are saying, “We aren’t distributing the copyrighted books! We are just distributing a mathematical abstraction of them.”

It is “Open-Washing.” They get the PR credit for being “Open,” while maintaining the secrecy required to dodge legal accountability.

Meanwhile, the actual Open Source community is struggling. Projects like the Allen Institute for AI’s OLMo (Open Language Model) release the code, the weights, and the dataset. They are doing the hard, dangerous work of true science. But because they don’t have Meta’s GPU budget, their models generally trail Llama in capability.

So, the developer community flocks to Llama. We choose convenience over freedom. We choose the shiny toy over the principled tool.

5. The Rug Pull Risk

What happens in 2027?

Let’s imagine that Llama 5 is the first AGI. It is powerful, dangerous, and incredibly valuable.

Do you think Mark Zuckerberg will release the weights for Llama 5?

Or will he say: “Due to safety concerns, and the changing regulatory landscape, Llama 5 will be available exclusively via the Meta Cloud API.”

We have seen this movie before.

OpenAI started as a non-profit open-source lab. Then they found something valuable, and they closed the doors.

Android started as a completely open project. Over time, Google moved all the good stuff into “Google Play Services,” leaving the open-source version (AOSP) a barren shell.

If we build our entire ecosystem on Llama—if we optimize our hardware for it, build our apps on it, train our engineers on it—we are handing Meta the kill switch for the entire industry.

6. Conclusion: Eat the Free Lunch, But Don’t Praise the Chef

Don’t get me wrong. I use Llama 3. It is a marvel of engineering. It allows me to run powerful AI on my local machine without paying OpenAI a cent. It is, functionally, a great product.

Eat the free lunch. Use the models. Build cool stuff with them.

But stop calling it “Open Source.”

Stop praising Mark Zuckerberg as the savior of digital freedom.

Stop pretending that “Open Weights” is the same thing as “Open Science.”

We are currently enjoying a subsidized period of history where a tech giant is giving us their crown jewels to hurt their rivals. It is a strategic move, not a moral one.

If we want true Open Source AI—AI that belongs to the people, AI that is reproducible, AI that cannot be revoked by a billionaire in Menlo Park—we need to support the projects that are actually doing it. We need to support OLMo. We need to support EleutherAI. We need to demand that “Open” means “Open Data.”

Otherwise, we are just trading one walled garden for another, and patting ourselves on the back for being free while we admire the new chains.